Banma Co-Pilot

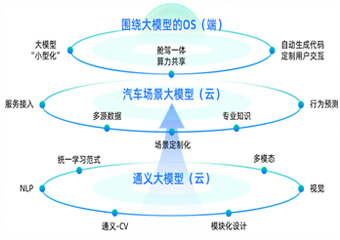

Built on the Tongyi large model and designed for automotive scenarios, Banma Co-Pilot provides cloud-integrated, full-stack AI capabilities. Its core capabilities include scenario customization, multi-source data, professional knowledge, service access, and behavior prediction, delivering a personalized experience and opening the era of the "user-defined car".

Hello Banma-Self Learning 2.0: By integrating the DAMO Academy Tongyi model, the Banma voice assistant can learn from the model in real time how to handle complex speech and correctly understand the user's intent. When the user later says something similar, the assistant can understand and execute it directly. With Self Learning 1.0, users had to teach the in-vehicle system themselves. Self Learning 2.0, which integrates large model technology, learns directly from the large model in real time, greatly improving learning efficiency.

Hello Banma-Free Dialogue: By integrating DAMO Academy's Tongyi large model and combining it with data accumulated and trained in the vehicle environment, the Banma voice assistant commands a large body of encyclopedic knowledge. It also offers better multi-turn dialogue, stronger logical reasoning, and richer generation capabilities, bringing users a new interactive experience.

Variety space: One-sentence scene generation creates a "variety space" that truly meets users' needs. For example, text-to-image generation is upgraded from offline to online, with scenes ranging from the deep sea and outer space to history and the future, offering unlimited imagination and possibility. The generation capability goes beyond producing an image: the model reasons over SOA atomic terms, schedules changes to the space, and integrates the five senses.
